Process GitHub Workflow Events with AWS Step Functions

- Stephen Jones

- Aws

- March 9, 2023


This is the next part of integrating GitHub Enterprise Managed User (EMU) events into the AWS serverless ecosystem.

Catch up on the first part here!

With EventBridge set up and receiving events from GitHub, let’s dive into the workflow_job event type.

The Goal

One of the many benefits of running on github.com is having GitHub-hosted runners available. Each month, your licensing includes a balance of runner minutes; however, that balance is shared across the whole instance.

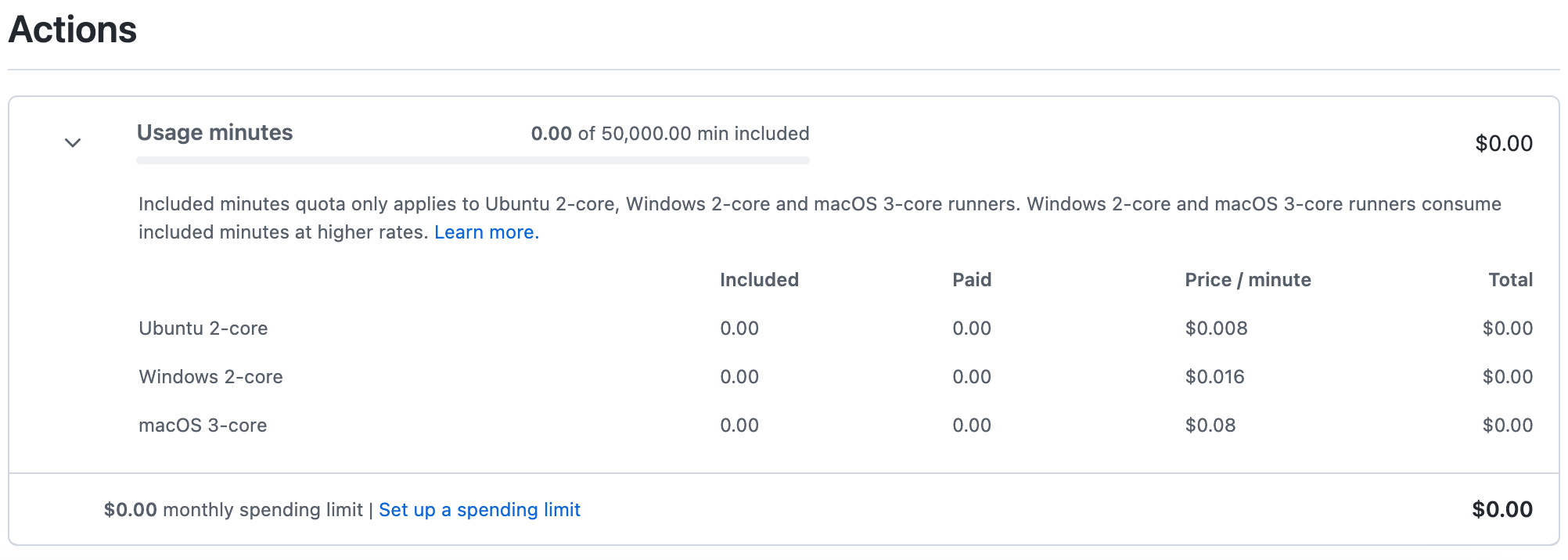

Pricing

Now don’t get me wrong, this is great, but each organization within the github.com instance has different requirements. First, I’m going to run out of included minutes, and when I do, I’ll need to charge each organization accordingly. Second, the spending limit also applies to the whole instance.

If you’ve been following along, you’ll know I’m a fan of self-hosted runners, which I’ve described in previous posts.

With our self-hosted runners already set up, all we need is a way to report on:

- Workflow events initiated by a user or organization

- Hours of compute time used on our AWS Spot Instances

Enter the power of AWS Step Functions and its native SDK integrations.

Let’s build it

First, we need to set up a trigger for our state machine: an EventBridge rule.

The rule’s event pattern is very simple and looks like this. We can make it more specific later if we need to.

```json
{
  "detail-type": ["workflow_job"]
}
```
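To make the matching concrete, here is a simplified local sketch in Python of how a pattern like this selects events. It is not EventBridge’s implementation. It only handles lists of exact values and nested objects, which is all this rule uses. The matches function and the sample events are hypothetical.

```python
# Simplified, local illustration of EventBridge event-pattern matching.
# Handles only lists of exact values and nested objects; real EventBridge
# patterns support much more (prefix, anything-but, numeric, exists, ...).


def matches(pattern: dict, event: dict) -> bool:
    """Return True if every key in `pattern` is satisfied by `event`."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: the event value must be an object that matches too.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            # Leaf pattern: the event value must equal one of the listed values.
            return False
    return True


rule = {"detail-type": ["workflow_job"]}

# Hypothetical, trimmed events.
workflow_event = {"detail-type": "workflow_job", "detail": {"action": "queued"}}
push_event = {"detail-type": "push", "detail": {}}

print(matches(rule, workflow_event))  # True
print(matches(rule, push_event))      # False
```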

With a little IAM configuration granting EventBridge the states:StartExecution action on our state machine, every workflow_job event that EventBridge receives starts a new execution.
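For reference, here is a minimal sketch of the two IAM policy documents involved, built as Python dictionaries. The first is a trust policy that lets events.amazonaws.com assume the role used by the rule target. The second is a permissions policy scoped to states:StartExecution on a single state machine. The function name and the state machine ARN are hypothetical placeholders, and the author’s CloudFormation may structure this differently.

```python
import json


def eventbridge_target_policies(state_machine_arn: str) -> tuple:
    """Return (trust_policy, permissions_policy) for the role EventBridge assumes."""
    # Trust policy: allows the EventBridge service to assume the role.
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "events.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    # Permissions policy: only allows starting executions of this state machine.
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "states:StartExecution",
                "Resource": state_machine_arn,
            }
        ],
    }
    return trust_policy, permissions_policy


# Hypothetical ARN, for illustration only.
arn = "arn:aws:states:ap-southeast-2:123456789012:stateMachine:github-workflow-job"
trust, permissions = eventbridge_target_policies(arn)
print(json.dumps(permissions, indent=2))
```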

The State Machine

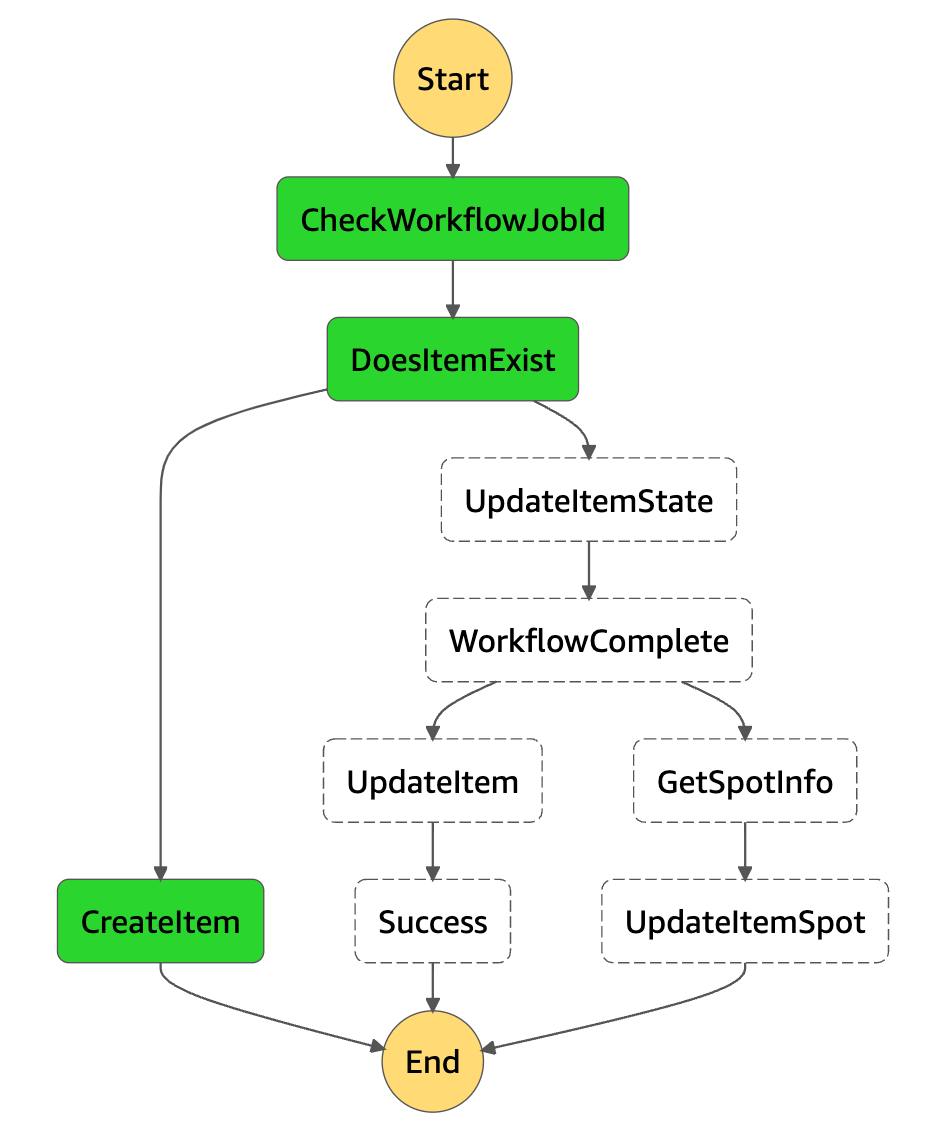

The diagram above shows the state machine definition that does the magic using the native SDK integrations detailed here.

NOTE: No Lambda functions here, and no code to manage apart from the state machine definition!

Let’s walk through each step of the flow.

CheckWorkflowJobId

When an event arrives via our EventBridge rule, we first need to check whether the DynamoDB table already has an item for the received workflow_job id.

Thankfully, each workflow_job id is unique, so it serves as the table’s key and the check is a simple key-condition query.

This is one of the beautiful things about Step Functions: each event received gets its own execution, so I don’t need to handle any ordering issues.

```json
{
  "StartAt": "CheckWorkflowJobId",
  "States": {
    "CheckWorkflowJobId": {
      "Next": "DoesItemExist",
      "Parameters": {
        "ExpressionAttributeValues": {
          ":id": {
            "S.$": "States.Format('{}', $.detail.workflow_job.id)"
          }
        },
        "KeyConditionExpression": "id = :id",
        "TableName": "github-events-cfn"
      },
      "Resource": "arn:aws:states:::aws-sdk:dynamodb:query",
      "ResultPath": "$.CheckWorkflowJobId",
      "Type": "Task"
    }
```
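A rough Python equivalent of the CheckWorkflowJobId parameters shows why States.Format is there. GitHub sends workflow_job.id as a number, while the key is stored with DynamoDB’s S (string) type. This is a sketch: the function name is hypothetical, and the trimmed event reuses the id from the sample item in The Data section.

```python
def check_workflow_job_query(event: dict, table_name: str = "github-events-cfn") -> dict:
    """Build DynamoDB Query parameters equivalent to the CheckWorkflowJobId state."""
    # workflow_job.id arrives as a number; the S type needs a string,
    # which is what States.Format('{}', ...) produces in the state machine.
    job_id = str(event["detail"]["workflow_job"]["id"])
    return {
        "TableName": table_name,
        "KeyConditionExpression": "id = :id",
        "ExpressionAttributeValues": {":id": {"S": job_id}},
    }


# Trimmed, hypothetical event.
event = {"detail": {"workflow_job": {"id": 11488767300}}}
params = check_workflow_job_query(event)
print(params["ExpressionAttributeValues"])  # {':id': {'S': '11488767300'}}
```

The dictionary uses the same field names as the boto3 DynamoDB client’s query call, so it could be passed as client.query(**params). The Count in the response is what the next state inspects.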

DoesItemExist

Next, we have a choice to make. If the query found no item for this workflow_job id (Count is 0), we create one using the id from the event.

If an item already exists in our DynamoDB table, we move on to the good stuff.

```json
"DoesItemExist": {
  "Choices": [
    {
      "Next": "CreateItem",
      "NumericEquals": 0,
      "Variable": "$.CheckWorkflowJobId.Count"
    }
  ],
  "Default": "UpdateItemState",
  "Type": "Choice"
},
```
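The Choice state boils down to a single comparison on the query result. Here is a minimal Python sketch of the same routing, with a hypothetical function name:

```python
def does_item_exist(state_input: dict) -> str:
    """Mirror the DoesItemExist Choice state and return the next state's name."""
    # NumericEquals 0 on $.CheckWorkflowJobId.Count
    if state_input["CheckWorkflowJobId"]["Count"] == 0:
        return "CreateItem"
    # Default branch
    return "UpdateItemState"


print(does_item_exist({"CheckWorkflowJobId": {"Count": 0}}))  # CreateItem
print(does_item_exist({"CheckWorkflowJobId": {"Count": 1}}))  # UpdateItemState
```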

CreateItem

This step creates one DynamoDB item per workflow_job id, using this simple schema:

```
{
  "id": <Unique workflow_job id>,
  "completed_at": <workflow_job completed_at timestamp>,
  "github_organization": <GitHub organization name>,
  "github_repository": <GitHub repository name>,
  "github_sender": <GitHub username of the requestor>,
  "runner_name": <EC2 Spot Instance ID>,
  "spot_price": <Spot price for the request>,
  "started_at": <workflow_job started_at timestamp>,
  "type": <Type of workflow>,
  "workflow_name": <Workflow name in GitHub>,
  "workflow_status": <Status of the workflow>
}
```

The putItem integration expects each attribute wrapped in a DynamoDB type descriptor, S for strings and NULL for values we fill in later:

```json
"CreateItem": {
  "Comment": "Send the github workflow_job event information for our DynamoDB item",
  "End": true,
  "Parameters": {
    "Item": {
      "completed_at": {"NULL": true},
      "conclusion": {"S.$": "$.detail.workflow_job.conclusion"},
      "github_organization": {"S.$": "$.detail.organization.login"},
      "github_repository": {"S.$": "$.detail.repository.name"},
      "github_sender": {"S.$": "$.detail.sender.login"},
      "id": {"S.$": "States.Format('{}', $.detail.workflow_job.id)"},
      "runner_name": {"S.$": "$.detail.workflow_job.runner_name"},
      "spot_price": {"NULL": true},
      "started_at": {"S.$": "$.time"},
      "type": {"S.$": "$.detail-type"},
      "workflow_name": {"S.$": "$.detail.workflow_job.name"},
      "workflow_status": {"S.$": "$.detail.workflow_job.status"}
    },
    "TableName": "github-events-cfn"
  },
  "Resource": "arn:aws:states:::dynamodb:putItem",
  "Type": "Task"
}
```
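The field mapping in CreateItem can be sketched locally in Python. This is an illustration, not the state machine itself. The attr and build_item helpers are hypothetical. Unlike the state definition, attr writes a NULL attribute whenever a value is missing or null, because GitHub typically sends a null conclusion and runner_name while a job is still queued. The event is a trimmed, hypothetical payload built from the sample item in The Data section.

```python
def attr(value) -> dict:
    """Wrap a value as a DynamoDB AttributeValue: NULL for None, otherwise a string."""
    if value is None:
        return {"NULL": True}
    return {"S": str(value)}


def build_item(event: dict) -> dict:
    """Map a workflow_job event to the item written by the CreateItem state."""
    detail = event["detail"]
    job = detail["workflow_job"]
    return {
        "id": attr(job["id"]),
        "completed_at": attr(None),            # filled in when the job completes
        "conclusion": attr(job.get("conclusion")),
        "github_organization": attr(detail["organization"]["login"]),
        "github_repository": attr(detail["repository"]["name"]),
        "github_sender": attr(detail["sender"]["login"]),
        "runner_name": attr(job.get("runner_name")),
        "spot_price": attr(None),              # filled in by UpdateItemSpot
        "started_at": attr(event["time"]),     # EventBridge event time
        "type": attr(event["detail-type"]),
        "workflow_name": attr(job["name"]),
        "workflow_status": attr(job["status"]),
    }


# Trimmed, hypothetical queued event.
event = {
    "detail-type": "workflow_job",
    "time": "2023-02-21T11:27:19Z",
    "detail": {
        "organization": {"login": "sjramblings"},
        "repository": {"name": "actions-testing"},
        "sender": {"login": "Stephen-Jones"},
        "workflow_job": {
            "id": 11488767300,
            "name": "build",
            "status": "queued",
            "conclusion": None,
            "runner_name": None,
        },
    },
}
item = build_item(event)
print(item["id"], item["conclusion"])  # {'S': '11488767300'} {'NULL': True}
```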

We take started_at from the EventBridge event’s time field so that we can track the full execution time of the workflow.

Now let’s walk through the flow when an Item exists.

UpdateItemState

When we receive an event for a workflow_job id that already exists, we first update the item’s workflow_status, which is a value such as queued, in_progress or completed.

The next state, WorkflowComplete, then checks that status. If it is completed, the UpdateItem state records the event’s timestamp as the final completed_at, and the execution moves to success.

```json
"UpdateItemState": {
  "Next": "WorkflowComplete",
  "Parameters": {
    "ExpressionAttributeValues": {
      ":workflow_status": {
        "S.$": "$.detail.workflow_job.status"
      }
    },
    "Key": {
      "id": {
        "S.$": "States.Format('{}', $.detail.workflow_job.id)"
      }
    },
    "TableName": "github-events-cfn",
    "UpdateExpression": "SET workflow_status = :workflow_status"
  },
  "Resource": "arn:aws:states:::dynamodb:updateItem",
  "ResultPath": "$.UpdateItemState",
  "Type": "Task"
}
```
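The WorkflowComplete and UpdateItem states are not shown in this post, so the following Python sketch is an assumption about their behaviour, based on the description above. A completed status stamps completed_at with the event’s time field, and any other status goes on to GetSpotInfo. The function name is hypothetical, and the parameter dictionary uses DynamoDB UpdateItem field names.

```python
def route_after_status_update(event: dict, table_name: str = "github-events-cfn"):
    """Return (next_state, update_params) for the step after UpdateItemState."""
    job = event["detail"]["workflow_job"]
    if job["status"] == "completed":
        # Assumed UpdateItem state: record the event time as completed_at.
        return "UpdateItem", {
            "TableName": table_name,
            "Key": {"id": {"S": str(job["id"])}},
            "UpdateExpression": "SET completed_at = :completed_at",
            "ExpressionAttributeValues": {":completed_at": {"S": event["time"]}},
        }
    # Any other status (queued, in_progress, ...): go and fetch the Spot details.
    return "GetSpotInfo", None


# Trimmed, hypothetical events.
done = {"time": "2023-02-21T11:29:16Z",
        "detail": {"workflow_job": {"id": 11488767300, "status": "completed"}}}
running = {"detail": {"workflow_job": {"id": 11488767300, "status": "in_progress"}}}

print(route_after_status_update(done)[0])     # UpdateItem
print(route_after_status_update(running)[0])  # GetSpotInfo
```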

If the workflow is in any other state, we retrieve the Spot Instance metadata and update the item.

GetSpotInfo

Using the runner_name from the workflow_job event, which our self-hosted runners set to the EC2 instance ID, we look up the Spot request with the describeSpotInstanceRequests SDK integration, filtering on instance-id.

This returns the request’s SpotPrice. Note that this is the maximum hourly price set on the request when the instance was launched, not necessarily the price actually billed, so it gives us an upper bound for chargeback.

```json
"GetSpotInfo": {
  "Comment": "Get the info of price for the SPOT instance for chargeback",
  "Next": "UpdateItemSpot",
  "Parameters": {
    "Filters": [
      {
        "Name": "instance-id",
        "Values.$": "States.StringSplit($.detail.workflow_job.runner_name, '')"
      }
    ]
  },
  "Resource": "arn:aws:states:::aws-sdk:ec2:describeSpotInstanceRequests",
  "ResultPath": "$.GetSpotInfo",
  "Type": "Task"
}
```
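The Values of an EC2 filter must be a list. That is what the States.StringSplit call here is used for: turning the single runner_name string into an array. States.Array would be another way to build that one-element list. Here is a small Python sketch of the resulting parameters, with a hypothetical function name and the instance ID from the sample item:

```python
def spot_request_filters(event: dict) -> dict:
    """Build DescribeSpotInstanceRequests parameters for the job's runner."""
    runner_name = event["detail"]["workflow_job"]["runner_name"]
    # Filter Values must be a list, even when matching a single instance ID.
    return {"Filters": [{"Name": "instance-id", "Values": [runner_name]}]}


# Trimmed, hypothetical event.
event = {"detail": {"workflow_job": {"runner_name": "i-0fb34f029ef789d38"}}}
params = spot_request_filters(event)
print(params)
# {'Filters': [{'Name': 'instance-id', 'Values': ['i-0fb34f029ef789d38']}]}
```

With boto3, the same dictionary could be passed as boto3.client("ec2").describe_spot_instance_requests(**params).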

UpdateItemSpot

Finally, we write the runner name and Spot price to the item.

```json
"UpdateItemSpot": {
  "End": true,
  "Parameters": {
    "ExpressionAttributeValues": {
      ":runner_name": {
        "S.$": "$.detail.workflow_job.runner_name"
      },
      ":spot_price": {
        "S.$": "States.ArrayGetItem($.GetSpotInfo.SpotInstanceRequests[:1].SpotPrice, 0)"
      }
    },
    "Key": {
      "id": {
        "S.$": "States.Format('{}', $.detail.workflow_job.id)"
      }
    },
    "TableName": "github-events-cfn",
    "UpdateExpression": "SET runner_name = :runner_name, spot_price = :spot_price"
  },
  "Resource": "arn:aws:states:::dynamodb:updateItem",
  "Type": "Task"
}
```
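Here is a Python sketch of what UpdateItemSpot computes. It takes the first Spot request’s SpotPrice, as SpotInstanceRequests[:1].SpotPrice combined with ArrayGetItem(..., 0) does, and builds the UpdateItem parameters. The function name and the trimmed response are hypothetical. Raising an error when no Spot request is found is this sketch’s own choice.

```python
def update_item_spot_params(event: dict, spot_response: dict,
                            table_name: str = "github-events-cfn") -> dict:
    """Build UpdateItem parameters equivalent to the UpdateItemSpot state."""
    job = event["detail"]["workflow_job"]
    requests = spot_response.get("SpotInstanceRequests", [])
    if not requests:
        # This sketch's own choice: refuse to store a blank price.
        raise ValueError(f"no Spot request found for {job['runner_name']}")
    # Same idea as SpotInstanceRequests[:1].SpotPrice + ArrayGetItem(..., 0):
    # take the SpotPrice of the first request only.
    spot_price = requests[0]["SpotPrice"]
    return {
        "TableName": table_name,
        "Key": {"id": {"S": str(job["id"])}},
        "UpdateExpression": "SET runner_name = :runner_name, spot_price = :spot_price",
        "ExpressionAttributeValues": {
            ":runner_name": {"S": job["runner_name"]},
            ":spot_price": {"S": spot_price},
        },
    }


# Trimmed, hypothetical inputs using values from the sample item.
event = {"detail": {"workflow_job": {"id": 11488767300,
                                     "runner_name": "i-0fb34f029ef789d38"}}}
spot_response = {"SpotInstanceRequests": [
    {"InstanceId": "i-0fb34f029ef789d38", "SpotPrice": "0.105600"},
]}
params = update_item_spot_params(event, spot_response)
print(params["ExpressionAttributeValues"][":spot_price"])  # {'S': '0.105600'}
```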

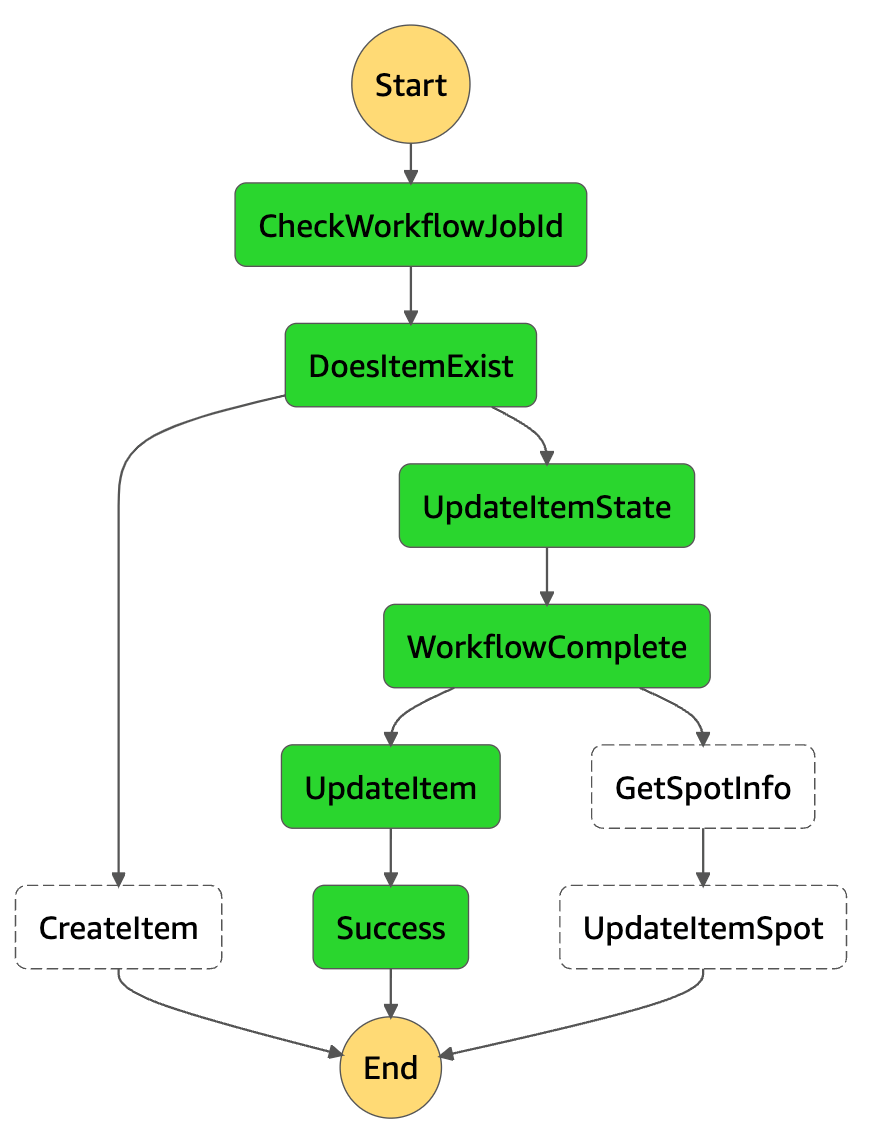

Executions by flow

The flow above is a little hard to follow from the JSON alone, so here are the console views of each execution as we process a single workflow_job id.

NOTE: We don’t care about the order in which events arrive, as the start and completion times of the overall workflow are recorded in the DynamoDB item.

First Time a workflow_job Event Received

An in_progress workflow_job Event Received

Completion workflow_job Event Received

The Data

Now that all of this happens through our event-driven architecture, we can query the data in our DynamoDB table, which looks like this:

```json
{
  "id": "11488767300",
  "completed_at": "2023-02-21T11:29:16Z",
  "github_organization": "sjramblings",
  "github_repository": "actions-testing",
  "github_sender": "Stephen-Jones",
  "runner_name": "i-0fb34f029ef789d38",
  "spot_price": "0.105600",
  "started_at": "2023-02-21T11:27:19Z",
  "type": "workflow_job",
  "workflow_name": "build",
  "workflow_status": "completed"
}
```

But I’ll save the querying for another post.
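As a taste of the chargeback arithmetic the item enables, here is a naive Python sketch for the sample item: run time multiplied by the hourly spot_price. It is an assumption for illustration, not the author’s reporting method. It ignores billing granularity. started_at is the time of the first event received, so it can include queue time. spot_price is the request’s maximum price. Treat the result as an estimate.

```python
from datetime import datetime
from decimal import Decimal

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def naive_job_cost(item: dict) -> tuple:
    """Return (duration_seconds, estimated_cost_usd) for one DynamoDB item."""
    started = datetime.strptime(item["started_at"], TIMESTAMP_FORMAT)
    completed = datetime.strptime(item["completed_at"], TIMESTAMP_FORMAT)
    seconds = int((completed - started).total_seconds())
    # spot_price is stored as a string of USD per instance-hour;
    # Decimal avoids float rounding in the money calculation.
    cost = Decimal(item["spot_price"]) * seconds / 3600
    return seconds, cost


item = {
    "started_at": "2023-02-21T11:27:19Z",
    "completed_at": "2023-02-21T11:29:16Z",
    "spot_price": "0.105600",
}
seconds, cost = naive_job_cost(item)
print(seconds, cost)  # 117 0.003432
```

For the sample item that is 117 seconds at $0.1056 per hour, roughly $0.0034.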

Summary

Hopefully, this post has shown the power of the native SDK integrations in AWS Step Functions and what can be achieved without any custom Lambda code.

All the CloudFormation to create this setup is located here.

I’ll leave you with this great thread on serverless: https://twitter.com/PaulDJohnston/status/1624762867411984386

Hope this helps someone else.

Cheers